During a website redesign, re-platform, or domain change, it is not uncommon to see a reduction in organic search traffic. The panic generally sets in weeks later, after trying one fix after another without the traffic returning.

In this post, I'm going to walk you through a process I use to accelerate recovery from this painful problem.

Identify the Root Causes

Instead of running a website through a full search-engine-optimization checklist, which generally takes a lot of valuable time, I prefer to focus on the 20 percent of glitches that are likely causing 80 percent of the problem. This approach allows for faster, incremental recovery, leaving time to address the other issues later.

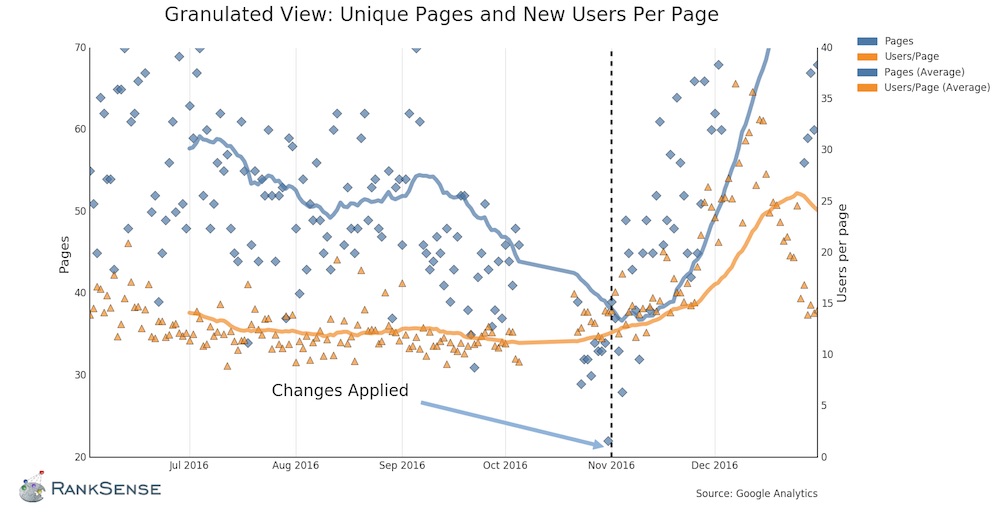

When organic traffic drops, it's typically caused by fewer pages performing, or fewer visitors per page, or both. I first try to determine which of these is the issue. If there are fewer pages performing, there potentially are indexing problems. If we have fewer visitors per page, the pages might be weak in content. If we have both issues, there could be page reputation problems — i.e., fewer quality links to the site. Duplicate content and stale content can both dilute page reputation, as can a link penalty.
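The decomposition described above (pages performing versus visitors per page) can be sketched in a few lines of Python. This is a minimal illustration, not the exact tooling used in the investigation: it assumes an analytics export reduced to `(period, page, visitors)` rows, and the function names, the 10 percent tolerance, and the sample numbers are all made up for the example.

```python
from collections import defaultdict


def traffic_breakdown(rows):
    """Summarize organic traffic per period.

    rows: iterable of (period, page_url, visitors) tuples, e.g. from an
    analytics export filtered to organic search (assumed input format).
    """
    pages = defaultdict(set)
    visitors = defaultdict(int)
    for period, page, count in rows:
        if count > 0:  # only pages that actually received traffic
            pages[period].add(page)
            visitors[period] += count
    return {
        period: {
            "pages": len(pages[period]),
            "visitors": visitors[period],
            "visitors_per_page": visitors[period] / len(pages[period]),
        }
        for period in pages
    }


def diagnose(before, after, tolerance=0.10):
    """Map the two signals to the broad problem area from the framework.

    tolerance is an arbitrary threshold for "roughly flat" (assumption).
    """
    fewer_pages = after["pages"] < before["pages"] * (1 - tolerance)
    fewer_per_page = (
        after["visitors_per_page"] < before["visitors_per_page"] * (1 - tolerance)
    )
    if fewer_pages and fewer_per_page:
        return "page reputation"
    if fewer_pages:
        return "indexing"
    if fewer_per_page:
        return "content"
    return "no clear drop"


# Made-up sample data: half the pages stop receiving traffic,
# while visitors per remaining page stay nearly flat.
rows = [
    ("2016-09", "/a", 100), ("2016-09", "/b", 100),
    ("2016-09", "/c", 100), ("2016-09", "/d", 100),
    ("2016-10", "/a", 100), ("2016-10", "/b", 98),
]
summary = traffic_breakdown(rows)
print(diagnose(summary["2016-09"], summary["2016-10"]))  # prints "indexing"
```

The output is only a starting hypothesis. It tells you where to look first, not what the actual fault is.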

Using this framework, one can isolate the problems that are likely hurting traffic. Common errors that I've seen are:

And here are the key places I quickly collect evidence:

In the next steps, each potential problem is a thesis to validate or invalidate. For this, I use data science tools to prepare the evidence collected and explore it with data visualization.

The most important part of this technique is developing the intuition to ask the right questions. Those questions should determine what data and evidence to collect, and how to process it and visualize it.

What follows is an actual investigation, with real customer data, to understand this data-driven framework.

Evidence Collection Example

I recently helped a brand-name retailer during a re-platform and redesign project, in preparation for the 2016 holiday season. We started advising the company in June, and the new site launched at the beginning of October. But the site started to experience a downtrend in organic search traffic before then, at the beginning of September, when year-over-year organic traffic was down approximately 20 percent.

The client was hoping to reverse this trend with the new site design.

We carefully planned everything. We mapped — via 301 redirects — all of the old URLs to the new ones. We did multiple rounds of testing and review on the staging site.

Note that we did not change title tags before the launch. I prefer not to change title tags during redesigns. Instead, I prefer to change them a couple of weeks afterwards. This is because, in my experience, title tag changes can result in re-rankings. So it is better to alter them in a more controlled fashion, so you can roll back the changes if they don't improve performance.

Regardless, a couple of weeks after the launch, organic traffic dropped even further, to roughly 40 percent below the previous year. Thankfully, we did identify the problem.

Using our framework, we asked whether we were losing pages, visitors per page, or both. We gathered the data and then graphed it.

The number of visitors (users) per page, shown in yellow in the lower portion of the graph, remained relatively constant. But the number of pages indexed, in the blue line, decreased. Fixing the problem in November 2016 reversed the trend.

The findings were obvious. In the graph, even during the downtrend, the number of visitors per page is nearly flat (see the lower, yellow line). But the number of pages that received traffic (the blue line) dropped dramatically.

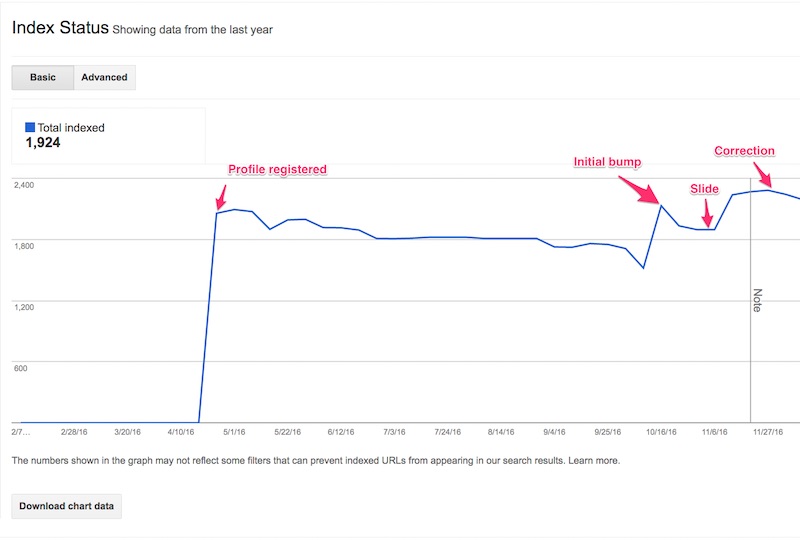

This key data analysis and visualization saved valuable time. I didn't need to look at content issues, but, instead, I could focus on indexing. We were able to confirm indexing problems while looking at Index Status in Google Search Console, as shown below.

Note an initial bump in indexed pages when the new site launched, but then the subsequent slide, and finally the correction bump at the beginning of November.

Next, we needed to determine why Google was dropping pages. So we looked into Google Search Console URL parameters — Search Console > Crawl > URL Parameters — to find indications of an infinite crawl space.

That topic, infinite crawl spaces, merits an explanation. Infinite crawl spaces often appear in websites with extensive databases, such as most ecommerce platforms, and cause search-engine robots to continue fetching pages in an endless loop. An example is faceted or guided navigation, which can produce a nearly limitless number of options. Infinite crawl spaces waste Googlebot's crawl budget and could prevent indexing of important pages.

In this example, however, we didn't have an infinite crawl space. If we had, we would have seen parameters with far more URLs than realistically exist on the site.
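The same check can be approximated outside Search Console. The sketch below assumes you have a flat list of crawled URLs (from server logs or a crawler export) and a rough idea of how many real pages the site has. The function names and the sample URLs are hypothetical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlsplit


def urls_per_parameter(urls):
    """Count the distinct URLs in which each query parameter appears."""
    seen = defaultdict(set)
    for url in urls:
        query = urlsplit(url).query
        for name, _value in parse_qsl(query, keep_blank_values=True):
            seen[name].add(url)
    return {name: len(group) for name, group in seen.items()}


def suspicious_parameters(urls, realistic_page_count):
    """Return parameters that appear in more URLs than the site realistically has pages."""
    counts = urls_per_parameter(urls)
    return sorted(name for name, n in counts.items() if n > realistic_page_count)


# Made-up example: faceted navigation multiplying one category page.
crawled = [
    "https://www.example.com/shoes?color=red",
    "https://www.example.com/shoes?color=red&size=9",
    "https://www.example.com/shoes?color=blue&size=9",
    "https://www.example.com/shoes?color=blue&size=10",
    "https://www.example.com/shoes?page=2",
]
print(suspicious_parameters(crawled, realistic_page_count=3))  # prints ['color']
```

On a real site the threshold would be the approximate number of indexable pages, and a parameter exceeding it by a wide margin is the signal worth investigating.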

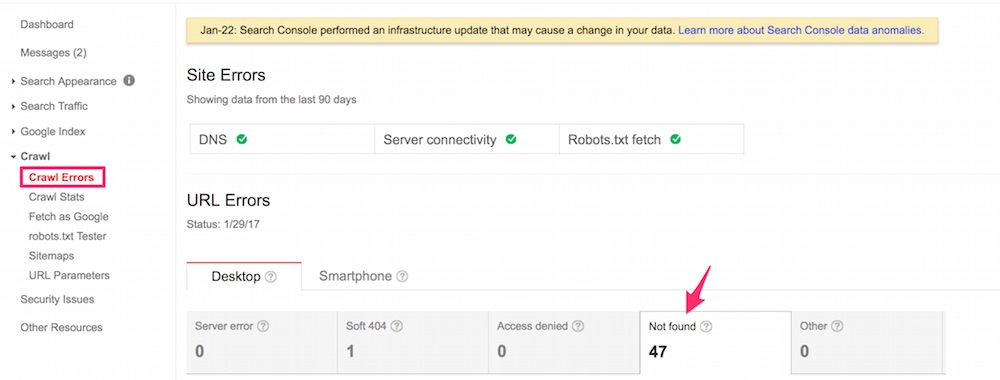

We then checked the 404 errors in Google Search Console, at Search Console > Crawl > Crawl Errors. We were surprised to see URLs that we had correctly mapped reported as 404 errors. We clicked on the URLs reported as errors and, as we expected, they redirected to the correct pages, which loaded normally. They didn't appear to be 404 errors.

Google Search Console reported URLs as 404 errors that had been correctly mapped.

I then opened Google Chrome Developer Tools to repeat the same test. But this time, I checked the response from the web server. Bingo! The page loaded with the right content, but the server returned a 404 status code, telling browsers and search engines that the page was not found.
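Checking one URL at a time in Developer Tools does not scale to a full redirect map, so the same test can be scripted. The following is a rough sketch using only the Python standard library. The function names and the `User-Agent` string are my own choices for illustration, and it assumes every mapped URL should end on a 200 response after redirects.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return (final_status_code, body_length) for url, following redirects."""
    request = urllib.request.Request(
        url, headers={"User-Agent": "status-check/0.1"}  # arbitrary label
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, len(response.read())
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx, but the error still carries the body,
        # so a page that renders content with a 404 status shows up here.
        return error.code, len(error.read())


def unexpected_statuses(urls, fetch=fetch_status):
    """Return {url: (status, body_length)} for URLs that do not end on a 200."""
    problems = {}
    for url in urls:
        status, body_length = fetch(url)
        if status != 200:
            problems[url] = (status, body_length)
    return problems
```

Calling `unexpected_statuses(old_urls)` with the list of old URLs from the redirect map returns the ones whose final response is not a 200. A 404 paired with a large body length is the pattern described above: the content renders, but the status code says the page does not exist. The `fetch` argument exists so the check can be exercised with a stub instead of live requests.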

Google "protects" 404 pages for 24 hours. After that they are dropped from the index. We worked with the development company, which corrected the status codes. Later, we also found another problem with the redirects: they were not preserving redundant parameters from the old URL, which affects paid search tracking and other marketing initiatives. All issues were promptly resolved. After waiting for Google to re-crawl the site, the organic traffic began to increase. Ultimately, organic traffic was well above 2015 levels.
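The parameter problem with the redirects is also easy to audit in code. This sketch compares the query string of an old URL with the URL it finally lands on and lists any parameter names that were lost along the way. The function name and the sample URLs (including the `utm_source` and `gclid` parameters) are illustrative assumptions, not the client's actual URLs.

```python
from urllib.parse import parse_qsl, urlsplit


def dropped_parameters(old_url, final_url):
    """Return the query parameter names present in old_url but missing from final_url."""
    old = dict(parse_qsl(urlsplit(old_url).query, keep_blank_values=True))
    new = dict(parse_qsl(urlsplit(final_url).query, keep_blank_values=True))
    return sorted(name for name in old if name not in new)


# Made-up example: the redirect keeps the page but loses the tracking parameters.
old_url = "https://www.example.com/old-shoes.html?utm_source=google&gclid=abc123"
final_url = "https://www.example.com/shoes/"
print(dropped_parameters(old_url, final_url))  # prints ['gclid', 'utm_source']
```

An empty list means the redirect carried every parameter through. Anything else is a candidate for broken campaign tracking.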

In short, if you are experiencing a downtrend in organic search traffic, do not panic. Adopt a scientific framework, such as the one I've described in this post. Isolate the broad reason for the decline, and then investigate until you find and fix the problem.

Source: SEO: How to Quickly Reverse a Traffic Downtrend
